Repeated Measures T-Test

A repeated measures or paired samples design is all about minimizing confounding variables such as participant characteristics. It does this either by using the same person in multiple levels of a factor, or by pairing participants across groups based on similar characteristics or a relationship and then having each member of the pair take part in a different treatment. Matched subjects is another term for this kind of design, and it refers specifically to designs in which different people are matched up by their characteristics. Participants are often matched by age, gender, race, socioeconomic status, or other demographic features, but they can also be matched on any other characteristic the researchers consider a possible confound. Twin studies are a good example of this kind of design: one twin has to be matched with the other and can't be matched to someone else's twin.

To reiterate the differences between a repeated measures t-test and the other kinds of tests you may have learned up to this point: a single sample t-test revolves around drawing conclusions about a treated population based on a sample mean and an untreated population mean (the population standard deviation is not known). Independent samples t-tests are all about comparing the means of two samples (usually a control or untreated group and a treated group) to draw inferences about whether those two groups differ in the broader population. Different, randomly assigned participants are used in each group. Related samples t-tests are like independent samples t-tests, except that they use the same person in multiple test groups or match people based on their characteristics or relationships, which cuts down on extraneous variables that might interfere with the data.

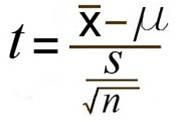

with the bottom portion referring to the estimated standard error. You may see this written as sM instead.
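As a rough illustration, the calculation can be sketched in Python. The formula image is not reproduced in the text, so this sketch assumes the usual repeated measures form: the mean of the difference scores minus the hypothesized population mean difference, divided by the estimated standard error (the standard deviation of the difference scores over the square root of n). The function name `paired_t` and the scores are made up for the example.

```python
import math
import statistics


def paired_t(before, after, mu_d=0.0):
    """Return (t, df) for paired scores, assuming the usual formula
    t = (M_D - mu_D) / s_MD with s_MD = s_D / sqrt(n)."""
    if len(before) != len(after):
        raise ValueError("every score needs a partner")
    # One difference score per participant (or matched pair).
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two pairs")
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD, divides by n - 1
    if sd_d == 0:
        raise ValueError("all differences are identical; t is undefined")
    est_se = sd_d / math.sqrt(n)  # estimated standard error (bottom portion)
    return (mean_d - mu_d) / est_se, n - 1


# Hypothetical scores for five participants measured twice.
before = [10, 12, 9, 14, 11]
after = [12, 15, 10, 17, 12]
t, df = paired_t(before, after)
print(f"t({df}) = {t:.2f}")  # t(4) = 4.47
```

Note that the test works on a single column of difference scores, which is why the degrees of freedom are the number of pairs minus one rather than the total number of scores.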
